Are LLMs Cheating At Math?

Earlier this month, Apple's AI research team released a paper that tackled a fundamental question that has been at the heart of many debates about AI capabilities: When AI models like ChatGPT solve math problems, are they actually reasoning like humans do?

To find out, they created a new testing framework called GSM-Symbolic, designed to probe the mathematical capabilities of large language models in ways previous benchmarks couldn't.

Their findings challenge some of our assumptions about AI's mathematical prowess and raise important questions about how we should be using these tools.

Why does this matter now?

As language models become increasingly integrated into education, coding, and analytical tools, understanding their true capabilities (and limitations) isn't just a matter of academic curiosity – it's crucial for responsible deployment.

Apple's research comes at a time when many are rushing to implement AI in mathematics education and problem-solving applications.

The experiment

GSM-Symbolic generates a virtually unlimited number of variations of grade-school math problems drawn from the widely used GSM8K benchmark. Think of it like a template system – where a question about "Sophie buying 31 blocks" can become "James buying 45 blocks" or "Maria buying 27 blocks."
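To make the template idea concrete, here is a minimal sketch in Python. It is not the paper's code. The template wording, the names, and the value ranges are hypothetical, chosen only to echo the blocks example above. The key point is that the correct answer is computed from the same sampled values, so every generated variant can be graded automatically.

```python
import random
from dataclasses import dataclass

# Hypothetical template in the spirit of GSM-Symbolic; wording, names,
# and value ranges are illustrative assumptions, not taken from the paper.
TEMPLATE = ("{name} buys {total} blocks and gives {given} to a friend. "
            "How many blocks does {name} have left?")
NAMES = ["Sophie", "James", "Maria"]


@dataclass
class Variant:
    question: str
    answer: int


def instantiate(rng: random.Random) -> Variant:
    """Fill the template with a random name and numbers."""
    name = rng.choice(NAMES)
    total = rng.randint(20, 60)
    given = rng.randint(1, total - 1)  # keeps the answer positive
    question = TEMPLATE.format(name=name, total=total, given=given)
    # Ground truth comes from the same sampled values as the question.
    return Variant(question, total - given)


rng = random.Random(0)
for _ in range(3):
    v = instantiate(rng)
    print(v.question, "->", v.answer)
```

Seeding a `random.Random` instance makes each generated set reproducible, which matters when comparing models on the same variants.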

This clever design allowed them to test whether AI models truly understand the underlying math concepts or are just good at recognizing patterns they've seen before. The key innovation was being able to systematically change different elements of each problem while keeping the core mathematical logic identical.

This approach revealed something fascinating: when faced with these variations, even the most advanced AI models showed surprising inconsistency in their performance, and adding a single irrelevant clause to an otherwise identical problem cut accuracy by as much as 65%.

First, researchers simply changed the numbers in basic math problems. "Split 10 apples between 2 people" became "Split 15 apples between 3 people." Same logic, different numbers – yet the models' performance dropped. A human who understands division would solve both easily.

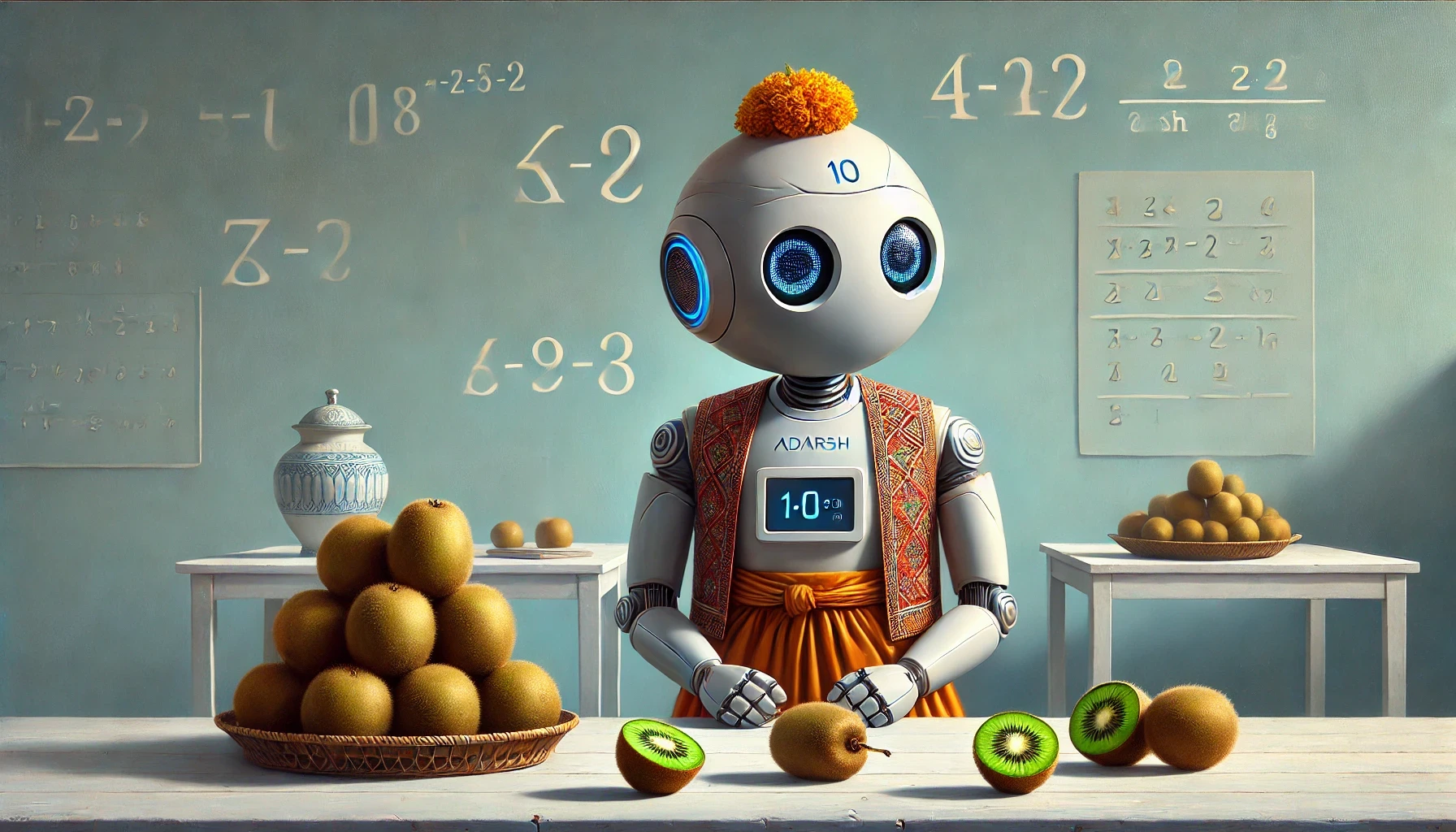
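A number-only perturbation like the apples example can be sketched as a template that resamples values while keeping the logic fixed. This is an illustration, not the paper's implementation. The value ranges and the exact-divisibility constraint are our own assumptions, added so that every variant has a clean whole-number answer.

```python
import random


def apple_variant(rng: random.Random) -> tuple[str, int]:
    """Resample the numbers in the 'split apples' problem; the logic stays fixed."""
    people = rng.randint(2, 9)
    per_person = rng.randint(2, 12)
    # Building the total from the answer guarantees apples % people == 0.
    apples = people * per_person
    question = (f"Split {apples} apples between {people} people. "
                "How many does each person get?")
    return question, apples // people


rng = random.Random(42)
for _ in range(3):
    q, a = apple_variant(rng)
    print(q, "->", a)
```

Constraints like this are what keep a template's variants valid problems. Without them, some instantiations would have fractional or negative answers and no longer test the same skill.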

Second, they added irrelevant details to the question. In a problem about counting kiwis picked over three days (correct total: 190), researchers added the phrase "but five of them were smaller than average." Models would often subtract these five kiwis and answer 185, even though size had nothing to do with the count.
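The arithmetic behind the kiwi example fits in a few lines. The figures below follow the widely quoted version of the paper's problem (44 kiwis on Friday, 58 on Saturday, and double Friday's count on Sunday); treat them as our recollection of that example. The correct total is 190. The answer of 185 is what you get by treating the irrelevant "smaller than average" clause as a subtraction.

```python
# Figures as commonly quoted from the paper's kiwi example (our recollection).
friday = 44
saturday = 58
sunday = 2 * friday  # "double the number of kiwis he did on Friday"
smaller_than_average = 5  # a no-op detail: size does not change the count

correct_total = friday + saturday + sunday
# The pattern-matching mistake: treating the extra number as something to subtract.
distracted_total = correct_total - smaller_than_average

print(correct_total)
print(distracted_total)
```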

Third, they tested complexity. Starting with a simple phone bill calculation problem, they gradually added rules like price drops and discounts. With each new rule, accuracy fell further and varied more across variants, showing how LLMs struggle with layered problems.
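To see why each added rule makes the problem harder, the layered phone-bill question can be modeled as tiered pricing plus an optional discount. All prices, thresholds, and the discount below are made up for illustration; they are not the paper's figures. Amounts are kept in integer cents to avoid floating-point rounding. Each extra clause adds one more step that a solver has to get right.

```python
from typing import Optional

Tier = tuple[int, int]  # (starting minute, price in cents per minute)


def call_cost_cents(minutes: int, tiers: list[Tier],
                    discount: Optional[tuple[int, int]] = None) -> int:
    """Cost of a call in cents.

    `tiers` must be sorted by starting minute and begin at minute 0.
    `discount` is (threshold_cents, percent_off), applied when the total
    exceeds the threshold.
    """
    cost = 0
    for i, (start, price) in enumerate(tiers):
        end = tiers[i + 1][0] if i + 1 < len(tiers) else minutes
        billed = max(0, min(minutes, end) - start)  # minutes falling in this tier
        cost += billed * price
    if discount is not None:
        threshold, percent_off = discount
        if cost > threshold:
            cost = cost * (100 - percent_off) // 100  # rounds down to whole cents
    return cost


# Each level adds one clause to the same underlying question (hypothetical numbers).
base = [(0, 60), (10, 50)]    # $0.60/min, dropping to $0.50 after 10 minutes
plus_one = base + [(25, 30)]  # ...and to $0.30 after 25 minutes
levels = {
    "base": call_cost_cents(60, base),
    "+1 rule": call_cost_cents(60, plus_one),
    "+2 rules": call_cost_cents(60, plus_one, discount=(1000, 25)),  # 25% off bills over $10
}
for name, cents in levels.items():
    print(f"{name}: ${cents / 100:.2f}")
```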

The training data dilemma

Many AI companies claim their models are "trained on math," but this research reveals something more nuanced about how that training actually impacts performance.

The way these models learn from their training data turns out to be significantly different from how students learn in a classroom, with important implications for anyone developing or using AI for mathematical tasks.

The study found that when models encountered variations of problems that were slightly different from their training data, their performance dropped sharply, suggesting they're not learning underlying mathematical principles but rather memorizing patterns.

Even the most advanced models showed concerning behavior when numbers in problems were changed while keeping the same mathematical logic, with accuracy dropping by as much as 15-20% just from changing values.

Performance degraded sharply as problems became more complex, indicating that models struggle to chain together multiple mathematical concepts even when they can handle each concept individually.
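A toy calculation shows why chaining steps is so unforgiving. This is not a result from the paper. It rests on a strong simplifying assumption: each step is solved correctly with the same probability p, and errors are independent. Under that assumption, a problem needing n steps is solved with probability p^n, so even 95% per-step accuracy falls to about 66% over eight steps.

```python
# Toy model (an assumption, not the paper's finding): each reasoning step
# succeeds independently with the same probability.
def chain_accuracy(step_accuracy: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps is correct."""
    return step_accuracy ** steps


for steps in (1, 2, 4, 8):
    print(steps, round(chain_accuracy(0.95, steps), 3))
```

Real models' errors are unlikely to be independent, so treat this as intuition for the trend rather than a prediction.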

Some newer models actually performed worse on certain variations than older models, suggesting that simply having more training data doesn't necessarily lead to better mathematical reasoning.

Does this change how we use AI?

It's not enough to celebrate when models get the right answers – we need to understand how they're arriving at those answers, and what that means for real-world applications. The question isn't whether AI can do math – it clearly can, often impressively well.

The real question is how we can build reliable products that leverage these capabilities while being transparent about their limitations. So what do these findings mean for you?

Here are a few recommendations we think might help:

For AI product teams: When implementing mathematical reasoning in your products, build in redundancy checks. Consider having the model solve the same problem with different numerical values or rephrase the question to validate consistency. This can help catch pattern-matching errors before they reach users.
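A consistency check of this kind can be sketched as follows. This is a minimal sketch under stated assumptions: `solve` is a hypothetical stand-in for whatever calls your model (not a real API), and the division template is illustrative. The check covers only numeric variants; rephrasing the question would need a separate set of templates.

```python
import random
from typing import Callable

Variant = tuple[str, int]


def consistency_check(solve: Callable[[str], int],
                      make_variant: Callable[[random.Random], Variant],
                      n: int = 5, seed: int = 0) -> list[tuple[str, int, int]]:
    """Return the variants the solver got wrong as (question, expected, got)."""
    rng = random.Random(seed)
    failures = []
    for _ in range(n):
        question, expected = make_variant(rng)
        got = solve(question)
        if got != expected:
            failures.append((question, expected, got))
    return failures


def division_variant(rng: random.Random) -> Variant:
    # Hypothetical template: same logic, resampled numbers.
    people = rng.randint(2, 9)
    apples = people * rng.randint(2, 12)
    return f"Split {apples} apples between {people} people. How many each?", apples // people


def memorizer(question: str) -> int:
    # Toy "model" that always gives the answer to one memorized instance.
    return 5


failures = consistency_check(memorizer, division_variant)
print(f"{len(failures)} of 5 variants answered inconsistently")
```

In production, a failed check could route the request to a fallback (a calculator tool or human review) rather than showing the answer.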

For ML engineers: When fine-tuning models for mathematical tasks, focus on testing with variations of the same problem rather than just expanding your dataset. Standard benchmarks don't tell the whole story about your model's capabilities – consider building internal benchmarks that match your specific use case.
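One way to build such an internal benchmark is to score a model on several freshly sampled sets of the same templates and report the spread, not just one accuracy number. The sketch below assumes a hypothetical template and a toy `brittle_solver` that stands in for a model. The solver is deliberately exact on small numbers and off by one on larger ones, so the per-set accuracy shifts with which numbers happen to be sampled.

```python
import random
import statistics
from typing import Callable

Variant = tuple[str, int]


def blocks_variant(rng: random.Random) -> Variant:
    # Hypothetical template; swap in problems from your own use case.
    total = rng.randint(10, 99)
    given = rng.randint(1, total - 1)
    return f"Start with {total} blocks and give away {given}. How many are left?", total - given


def accuracy_spread(solve: Callable[[str], int],
                    make_variant: Callable[[random.Random], Variant],
                    n_sets: int = 10, set_size: int = 20,
                    seed: int = 0) -> tuple[float, float]:
    """Score `solve` on several sampled sets; return (mean, stdev) of per-set accuracy."""
    rng = random.Random(seed)
    accuracies = []
    for _ in range(n_sets):
        correct = 0
        for _ in range(set_size):
            question, expected = make_variant(rng)
            correct += solve(question) == expected
        accuracies.append(correct / set_size)
    return statistics.mean(accuracies), statistics.stdev(accuracies)


def brittle_solver(question: str) -> int:
    # Toy stand-in for a model: exact when the total is below 50, off by one otherwise.
    words = question.split()
    total, given = int(words[2]), int(words[7].rstrip("."))
    answer = total - given
    return answer if total < 50 else answer + 1


mean, spread = accuracy_spread(brittle_solver, blocks_variant)
print(f"accuracy {mean:.2f} ± {spread:.2f} across sets")
```

A wide spread across sets that differ only in their numbers is itself a warning sign, even when the mean looks acceptable.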

For startup founders: Before marketing your AI product as having "mathematical reasoning capabilities," consider what that really means. There might be opportunities in building specialized tools that excel at specific types of problems rather than trying to solve everything at once. The limitations revealed in this research could actually point to new product opportunities.

For everyday tasks: Hold onto your LLM! It's genuinely good at simple calculations and logic, such as checking the budget for a holiday. These are exactly the kinds of clean, structured math problems where AI tends to perform well without extra help.

While we often talk about AI "reasoning" or "thinking," what we're actually seeing is a sophisticated but fundamentally different approach to problem-solving. Just as the invention of telescopes didn't replicate human vision but rather expanded it in new ways, AI's approach to mathematics isn't trying to replicate human understanding. The goal is to better understand exactly what we're working with.